
TL;DR

Every major AI company collects data.

The only question: what happens after you hit send?

Free tools often train on your prompts.

Paid plans offer isolation.

Enterprise tiers sell compliance.

Local models keep everything in-house.

The spectrum runs from “public playground” to “private vault.”

And most users have no idea where they are on it.

Why This Matters

Generative AI has become a business partner — in strategy decks, sales docs, legal drafts, and investor memos.

But every question, brainstorm, or upload you send to a cloud AI tool leaves a trace in someone else’s data lake.

This isn’t paranoia. It’s infrastructure.

Data flows through three layers:

Training exposure — does your input feed future models?

Retention policy — how long are logs stored?

Compliance posture — is it certified, encrypted, and contract-bound?

AI tools differ wildly across these layers. The risk isn’t what you write — it’s where you write it.
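The three layers can double as a per-tool checklist. Below is a minimal Python sketch. The `ToolProfile` fields, the 30-day retention threshold, and the example values are illustrative assumptions, not any vendor’s published terms.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToolProfile:
    """Hypothetical record of one AI tool's answers to the three privacy layers."""
    name: str
    trains_on_inputs: bool                                  # Layer 1: training exposure
    retention_days: Optional[int]                           # Layer 2: None = unknown or indefinite
    certifications: set[str] = field(default_factory=set)   # Layer 3: e.g. {"SOC 2"}
    has_contract: bool = False                              # Layer 3: contract-bound data terms


def privacy_flags(tool: ToolProfile, max_retention_days: int = 30) -> list[str]:
    """Return human-readable concerns across the three layers.

    The 30-day default is an assumed threshold; set it to your own policy.
    """
    flags = []
    if tool.trains_on_inputs:
        flags.append("training: inputs may feed future models")
    if tool.retention_days is None:
        flags.append("retention: unknown or indefinite")
    elif tool.retention_days > max_retention_days:
        flags.append(f"retention: {tool.retention_days} days exceeds {max_retention_days}")
    if not tool.certifications:
        flags.append("compliance: no certifications on record")
    if not tool.has_contract:
        flags.append("compliance: no contractual data terms")
    return flags


# Example with made-up values, not a real vendor's terms.
example = ToolProfile(name="ExampleChat Free", trains_on_inputs=True, retention_days=None)
for flag in privacy_flags(example):
    print(flag)
```

An empty list only means nothing tripped your thresholds. It is a prompt for a closer look, not a verdict that a tool is safe.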

Model-by-Model Breakdown

1. OpenAI (ChatGPT, GPT-4, GPT-4o)

Privacy Philosophy: Train first, protect later.

How it actually works:

Free + Plus users: Chats are stored and, by default, may be used for model training.

Team / Enterprise users: No training, SOC 2 compliant, encrypted, and admin-controlled.

Data control: Free and Plus users must opt out manually under “Settings → Data Controls.”

Reality check:

OpenAI’s privacy protection scales with your wallet. The more you pay, the less they use your data.

Free users feed the system. Paid users fence themselves off.

Best for: Power users who understand their risk profile.

Avoid for: Client data, financials, or regulated content.

Privacy Grade: B- (Free) / A- (Enterprise).

2. Anthropic (Claude 3 Family)

Privacy Philosophy: Privacy is structural, not optional.

How it actually works:

Claude never trains on user data.

Temporary logs are kept for abuse detection, then deleted automatically.

Claude’s business plans add retention policies, SOC 2 compliance, and GDPR alignment.

Reality check:

Anthropic built privacy into its model pipeline. Even free users get strong protection.

Enterprise tiers add legal-grade guarantees, not just promises.

Best for: Professionals who need privacy without setup.

Avoid for: Developers seeking custom fine-tuning (Anthropic is intentionally conservative).

Privacy Grade: A+ — gold standard for user trust.

3. Perplexity AI

Privacy Philosophy: Smarter search, softer tracking.

How it actually works:

Free users: queries are stored for analytics but not used for model training.

Pro plan ($20/mo): disables training, anonymizes queries, and adds “Pro Search” privacy mode.

Data still logged for quality improvement, but decoupled from user identity.

Reality check:

Perplexity’s privacy beats traditional search but stops short of full anonymity. Great for research, not for trade secrets.

Best for: Market analysts, researchers, and content teams.

Avoid for: Legal or client-sensitive work.

Privacy Grade: B — effort shows, but analytics remain.

4. xAI (Grok on X)

Privacy Philosophy: Integration over isolation.

How it actually works:

All prompts linked to your X account.

Operates inside X’s ad and engagement ecosystem.

No enterprise plan, no independent privacy policy, and no data firewall.

Reality check:

Grok is designed for conversation, not confidentiality. Its data lives in a social graph, not a secure container.

Best for: Fun, exploration, or trend-following.

Avoid for: Anything serious, sensitive, or strategic.

Privacy Grade: D — visibility by design.

5. Local & Open Models (Mistral and Llama 3, run via LM Studio or Ollama)

Privacy Philosophy: If it’s on your device, it’s yours.

How it actually works:

Runs entirely offline (no cloud connection).

No logs, no telemetry, no vendor exposure.

Data remains on-device and deletable at any time.

Reality check:

Local LLMs trade polish for privacy. They’re slower and less capable, but completely sealed.

If privacy is your primary goal, nothing beats owning the hardware and weights.

Best for: Developers, R&D teams, security-sensitive operations.

Avoid for: Casual productivity or fast content generation.

Privacy Grade: A+ — true isolation.

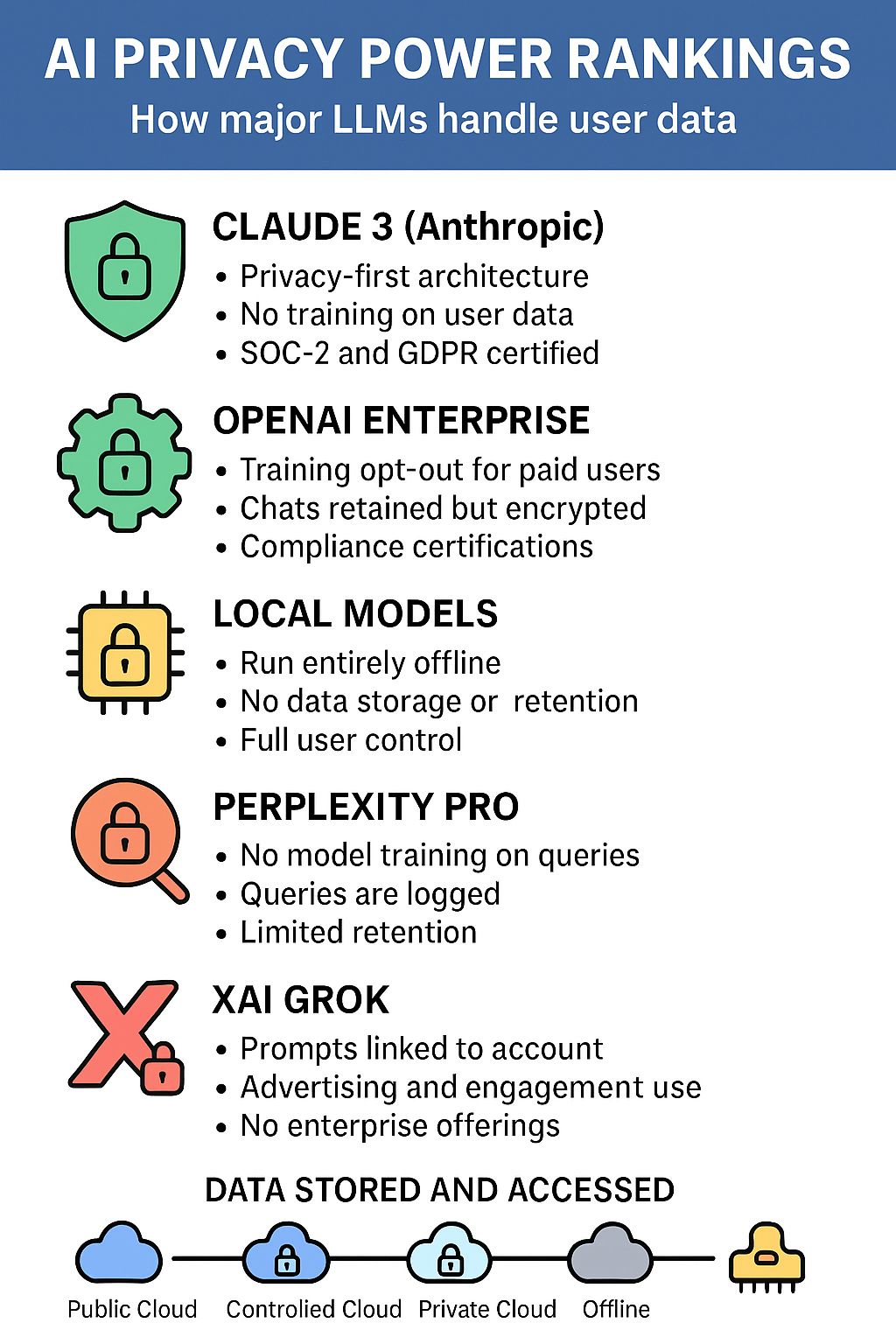

Expert Rankings — The Real Hierarchy

🟢 Claude 3 (Anthropic)

Privacy-first architecture

No training, minimal retention

SOC 2 compliant and GDPR-aligned

🟢 OpenAI Enterprise

Strong compliance layer

Configurable retention and encryption

Paywall separates public vs. private

🟡 Local Models (Mistral / Llama 3 / Ollama)

Complete isolation

Requires setup and hardware

🟡 Perplexity Pro

No training, but queries still logged for analytics

Ideal for information work

🔴 xAI Grok

Public by default

No enterprise pathway

The Takeaway

Privacy in AI isn’t binary — it’s graded.

You’re not choosing between “safe” and “unsafe.”

You’re choosing between who gets to see your data, and when.

Here’s the real equation:

| Free Models | Paid Models | Enterprise Models | Local Models |
|---|---|---|---|
| You are the dataset. | You rent isolation. | You buy compliance. | You own privacy. |

If your data is your edge — whether creative, corporate, or confidential — privacy isn’t optional. It’s operational.

Visual Architecture — Where Your Data Actually Goes

1. Free Models (Public Cloud)

Your chat → Cloud Server → Stored Logs → Model Training

🧩 Used to improve performance for everyone.

2. Paid Models (Controlled Cloud)

Your chat → Cloud Server → Stored Securely → Model Isolation

🧱 Used by you, but not shared for training.

3. Enterprise Models (Private Cloud)

Your chat → Company-managed container → Encrypted → Audited

🔒 Compliant, contract-bound isolation.

4. Local Models (Offline)

Your chat → Local Device → Local Memory or Disk → Deleted When You Choose

⚙️ No network, no vendor storage, no external trace.

How to Talk to AI (Safely and Strategically)

1. Segment your use.

Use free AI for public ideas, paid AI for productivity, enterprise AI for sensitive data, and local AI for private work.

2. Treat prompts like permanent records.

If you wouldn’t email it to a regulator, don’t paste it into a chatbot.

3. Revisit your settings every quarter.

Providers quietly change retention rules, so make data audits part of your workflow.

4. Create an internal “AI Data Map.”

Log which models your team uses, what’s shared, and what’s off-limits.

5. Train for safe prompting.

Teach teams to redact client names, contracts, or financials before using any public model.
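Redaction can be partly automated before text leaves your machine. Below is a minimal Python sketch. It assumes three kinds of sensitive content: email addresses, dollar amounts, and a client-name list you supply. The `redact` helper and its placeholder labels are hypothetical. Simple patterns only catch what they are written to catch, so treat this as a first pass, not a guarantee.

```python
import re


def redact(text: str, client_names: list[str]) -> str:
    """Swap emails, dollar amounts, and listed client names for placeholders.

    Hypothetical helper with deliberately simple patterns; review the output
    before sending it anywhere.
    """
    # 1. Email addresses (simplified pattern). Runs first so a client name
    #    inside an address cannot break the email match.
    text = re.sub(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", "[EMAIL]", text)

    # 2. Dollar amounts such as $1200, $1,200, $4.99, or $3.5M.
    text = re.sub(r"\$\d+(?:,\d{3})*(?:\.\d+)?(?:[KMB]\b)?", "[AMOUNT]", text)

    # 3. Client names: whole-word, case-insensitive. Longest first, so
    #    "Acme Corp" is replaced before the shorter "Acme".
    for name in sorted(client_names, key=len, reverse=True):
        pattern = rf"\b{re.escape(name)}\b"
        text = re.sub(pattern, "[CLIENT]", text, flags=re.IGNORECASE)
    return text


draft = "Email jane@acme.com: Acme Corp owes $1,200, and Acme's Q3 budget is $3.5M."
print(redact(draft, ["Acme", "Acme Corp"]))
# → Email [EMAIL]: [CLIENT] owes [AMOUNT], and [CLIENT]'s Q3 budget is [AMOUNT].
```

Order matters here: emails go first because replacing a client name inside an address would leave a half-redacted email behind.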

ROI Prompts — Use AI to Audit Your AI

1. Privacy Risk Map:

“List every AI tool my company uses and classify by data risk: public, private, enterprise, local.”

2. Policy Builder:

“Write a one-page company AI usage policy separating tools by privacy tier.”

3. Compliance Tracker:

“Summarize which AI vendors are SOC 2 compliant or GDPR-aligned as of this quarter.”
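The Privacy Risk Map prompt above asks for tools classified by tier. That same map can live as data your team checks before pasting anything. This Python sketch is hypothetical: the tool names are placeholders, and the tier-per-sensitivity rule is one reading of the “Segment your use” guidance. Under that reading, public ideas can go anywhere, internal work needs paid or better, sensitive data needs enterprise or local, and private work stays local. It is not a standard.

```python
from enum import Enum


class Tier(Enum):
    FREE = "free"
    PAID = "paid"
    ENTERPRISE = "enterprise"
    LOCAL = "local"


# Assumed policy: which tiers may receive each data sensitivity level.
ALLOWED_TIERS = {
    "public": {Tier.FREE, Tier.PAID, Tier.ENTERPRISE, Tier.LOCAL},
    "internal": {Tier.PAID, Tier.ENTERPRISE, Tier.LOCAL},
    "sensitive": {Tier.ENTERPRISE, Tier.LOCAL},
    "private": {Tier.LOCAL},
}

# The team's AI Data Map: placeholder tool names mapped to their tier.
AI_DATA_MAP = {
    "chatbot-free": Tier.FREE,
    "chatbot-pro": Tier.PAID,
    "assistant-enterprise": Tier.ENTERPRISE,
    "local-llm": Tier.LOCAL,
}


def may_use(tool: str, sensitivity: str) -> bool:
    """Return True if the tool's mapped tier is allowed for this sensitivity.

    Unlisted tools and unknown sensitivity labels are refused by default.
    """
    tier = AI_DATA_MAP.get(tool)
    allowed = ALLOWED_TIERS.get(sensitivity, set())
    return tier is not None and tier in allowed


print(may_use("chatbot-free", "public"))             # True
print(may_use("chatbot-free", "sensitive"))          # False
print(may_use("assistant-enterprise", "sensitive"))  # True
print(may_use("unlisted-tool", "public"))            # False
```

Refusing unlisted tools by default keeps the map honest: a new tool stays off-limits until someone classifies it.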

10 Bonus Prompts

“Summarize each AI company’s latest privacy update.”

“Draft a client-facing statement about how we use AI securely.”

“Build a checklist for safe AI use across departments.”

“Explain AI privacy tiers to non-technical employees.”

“Create a report comparing local vs. cloud AI privacy models.”

“List enterprise-ready AI models with data isolation.”

“Design a workflow for prompt safety in client work.”

“Generate a quarterly AI privacy review checklist.”

“Summarize GDPR rules that apply to AI usage.”

“Write a memo explaining AI privacy in plain language.”

Quick Recap — The Privacy Spectrum

1️⃣ Claude 3 (Anthropic): Privacy-first by design.

2️⃣ OpenAI Enterprise: Pay for control and compliance.

3️⃣ Local Models: 100% isolation, 0% convenience.

4️⃣ Perplexity Pro: Safer search, not secure storage.

5️⃣ xAI Grok: Social data, zero privacy.

Closing Thought

Privacy in AI isn’t a feature — it’s a choice you configure.

If you’re not paying for isolation, you’re paying with data.

The smartest users aren’t paranoid; they’re deliberate.

They treat AI like a network, not a diary.

Choose your layer wisely. Then work like someone’s watching — because, in most cases, someone is.



If you enjoyed this issue and want more like it, subscribe to the newsletter.

Brought to you by Stoneyard.com


About This Newsletter

AI Super Simplified is where busy professionals learn to use artificial intelligence without the noise, hype, or tech-speak. Each issue unpacks one powerful idea and turns it into something you can put to work right away.

From smarter marketing to faster workflows, we show real ways to save hours, boost results, and make AI a genuine edge — not another buzzword.

Get every new issue at AISuperSimplified.com — free, fast, and focused on what actually moves the needle.